Have you ever downloaded an app called Chat & Ask AI? If so, there's a good chance your messages were exposed last month. In January, an independent researcher was able to easily access some 300 million messages on the service, according to 404 Media's Emanuel Maiberg. The data included chat logs related to all kinds of sensitive topics, from drug use to suicide.

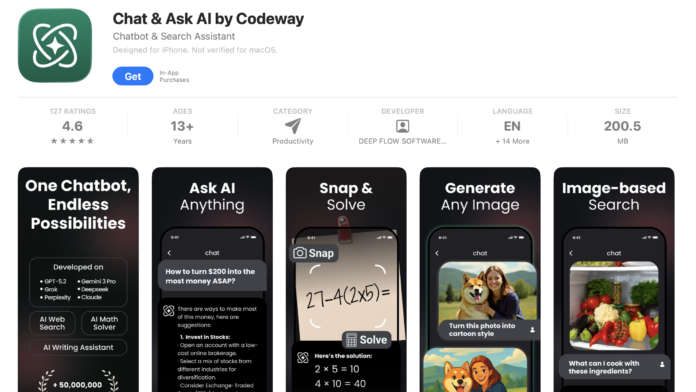

Chat & Ask AI, an app offered by the Istanbul-based company Codeway and available on both the Apple and Google app stores, claims to have around 50 million users. The app essentially resells access to large language models from other companies, including OpenAI, Anthropic (maker of Claude), and Google, and offers its users limited free access.

The issue that led to the data leak was an insecure Google Firebase configuration, a fairly common vulnerability. The researcher was simply able to make himself an “authenticated” user, at which point he could read messages from 25 million of the app's users. He reportedly extracted and analyzed around 60,000 messages before reporting the issue to Codeway.

The good news: the issue was quickly patched. More good news: there have been no reports of those messages leaking to the broader web. Still, this is yet another reason to think carefully about the kinds of messages you send AI chatbots. Remember, conversations with AI chatbots are not private: by their nature, these systems often save your conversations so they can “remember” them later. In the event of a data breach, that could lead to embarrassment, or worse. And using a reseller like Chat & Ask AI to access large language models adds another layer of potential security risk, as this recent leak demonstrates.